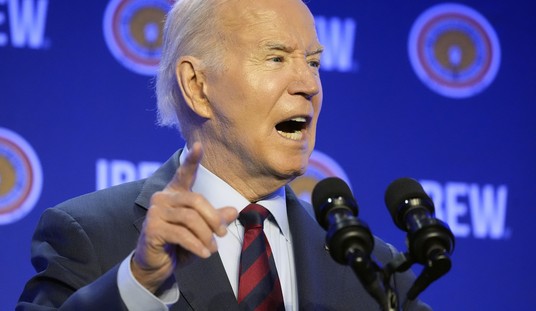

President Joe Biden on Monday issued an executive order to regulate how companies use artificial intelligence. The administration presents the move as a comprehensive government approach to harnessing the promise and mitigating the risks of AI technology in the United States.

But, like other state efforts to regulate industry, this particular plan has some serious flaws – especially related to the section on “equity” and “civil rights.”

Overall, the order centers on ramping up safety standards for AI. Among other things, it requires developers of high-risk systems to share their safety test results with the state, a requirement that will be enforced using the Defense Production Act. It also includes a plethora of other measures, including the establishment of an AI Safety and Security Board.

One component of the executive order seeks to use the power of the state to address “algorithmic discrimination” and ensure that AI technology somehow advances equity and civil rights. The White House describes this section as follows:

> Irresponsible uses of AI can lead to and deepen discrimination, bias, and other abuses in justice, healthcare, and housing. The Biden-Harris Administration has already taken action by publishing the Blueprint for an AI Bill of Rights and issuing an Executive Order directing agencies to combat algorithmic discrimination, while enforcing existing authorities to protect people’s rights and safety. To ensure that AI advances equity and civil rights, the President directs the following additional actions:
>
> Provide clear guidance to landlords, Federal benefits programs, and federal contractors to keep AI algorithms from being used to exacerbate discrimination.
>
> Address algorithmic discrimination through training, technical assistance, and coordination between the Department of Justice and Federal civil rights offices on best practices for investigating and prosecuting civil rights violations related to AI.
>
> Ensure fairness throughout the criminal justice system by developing best practices on the use of AI in sentencing, parole and probation, pretrial release and detention, risk assessments, surveillance, crime forecasting and predictive policing, and forensic analysis.

This type of government intervention could stifle innovation by adding more layers of regulation and more hoops for AI developers to jump through. Moreover, the mechanism for promoting equity is wrapped in government-speak and ambiguity, which would likely cause more confusion.

Under Biden’s order, federal agencies are directed to coordinate “best practices,” which, in bureaucratic parlance, typically translates to more paperwork, regulations, red tape, and administrative annoyances. Small AI startups that do not possess the funding necessary to navigate the complex web of government requirements will find it even more challenging to foster innovation and the development of products that could help Americans.

Another issue is that requiring developers to use AI to promote civil rights could end up trampling those very rights. Increasing government control over this sector could make developers more hesitant to explore different avenues out of fear of running afoul of state-imposed standards. Regulators could halt or penalize an algorithm that is not inherently bad but somehow does not meet the arbitrary standards put in place by bureaucrats.

Lastly, making the state the ultimate arbiter of what is “equitable” could bring about a pernicious power dynamic. Giving the government even this level of power will only lead to it seizing more. What is to stop it from grabbing more authoritarian control over other areas of AI? With the makeup of our federal government, it is not outside the realm of possibility that politicians and bureaucrats would force AI developers to create products that promote ideas and policies rooted in far-left progressivism.

We have already seen how the government tried to pressure social media companies into suppressing users expressing dissenting views about COVID-19, vaccines, and elections, haven’t we? There can be no doubt that officials would jump at the opportunity to mold AI into a propaganda tool that the government can use to ensure compliance from the public.

This is an issue that Americans should watch closely. As is typical, the government is imposing measures that are supposedly intended to benefit the country. But, in reality, this order will only make the state more powerful.